OpenAI Releases Two Open-Source AI Models That Perform on Par With o3 and o3-Mini

OpenAI just dropped a bombshell on the AI world, releasing two open-source AI models after a long hiatus. It is a page from the company's own playbook, harking back to the GPT-2 days of 2019. These aren't run-of-the-mill models either. Meet gpt-oss-120b and gpt-oss-20b, with performance that rivals OpenAI's own o3 and o3-mini.

Built on a mixture-of-experts (MoE) architecture, both models went through rigorous safety training. Ready to tinker? The model weights are available for download on Hugging Face, so you can start building right away.

OpenAI’s Open-Source AI Models Support Native Reasoning

Sam Altman, OpenAI's CEO, announced the release of gpt-oss-120b on X (formerly Twitter), claiming the model handles tough health questions about as well as o3. Both models are available on OpenAI's Hugging Face page, where you can download the open weights and experiment with them locally.

The models are compatible with OpenAI's Responses API and are built for agentic workflows, with support for capabilities such as web search and Python code execution. Their built-in reasoning exposes the model's thought process, and the reasoning effort can be tuned to favor either accuracy or speed.

Under the hood, both models use a mixture-of-experts (MoE) architecture, which improves efficiency by activating only a fraction of the total parameters for each token. The larger gpt-oss-120b has 117 billion parameters in total but uses only 5.1 billion for each token it processes, while the smaller gpt-oss-20b (21 billion parameters in total) activates just 3.6 billion. Both support context lengths of up to 128,000 tokens.
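To make the "only a fraction of parameters per token" idea concrete, here is a minimal toy sketch of top-k expert routing in plain Python. It is not OpenAI's implementation: the expert count (8), the number of active experts (2), the vector size (4), the scaling "experts", and the names `moe_forward` and `router` are all hypothetical choices for illustration. A router scores every expert, only the top-k experts actually run, and their outputs are mixed using softmax gate weights. The last lines simply compute the active-parameter share from the figures quoted above.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(token, experts, router, k=2):
    """Send one token vector through only its top-k experts and mix the results.

    token:   list of floats (the token's hidden vector)
    experts: list of callables, each mapping a vector to a same-sized vector
    router:  one weight vector per expert; its dot product with the token
             is that expert's score
    """
    # Score every expert for this token (cheap: one dot product per expert)
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep the k best-scoring experts; the rest are skipped for this token
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights: softmax over the selected experts' scores only
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for gate, i in zip(gates, top):
        y = experts[i](token)  # only these k experts do any compute
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top

# Hypothetical toy setup (NOT gpt-oss's real config): 8 experts, 2 active per token
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
# Each toy "expert" just scales its input by a different constant
experts = [(lambda v, s=s: [s * x for x in v]) for s in range(1, NUM_EXPERTS + 1)]
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

output, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], experts, router, k=TOP_K)
print("experts used:", chosen)

# Active-parameter share, using the figures quoted in the article
for name, active, total in [("gpt-oss-120b", 5.1, 117), ("gpt-oss-20b", 3.6, 21)]:
    print(f"{name}: {active / total:.1%} of parameters active per token")
```

The last loop prints about 4.4% for gpt-oss-120b and 17.1% for gpt-oss-20b. In a real model, shared components such as attention layers and embeddings also count toward the active parameters, so that share is not simply k divided by the number of experts, as this toy might suggest.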

Trained mostly on English text, the models focus on STEM, coding, and general knowledge. After pretraining, OpenAI refined them with reinforcement learning.

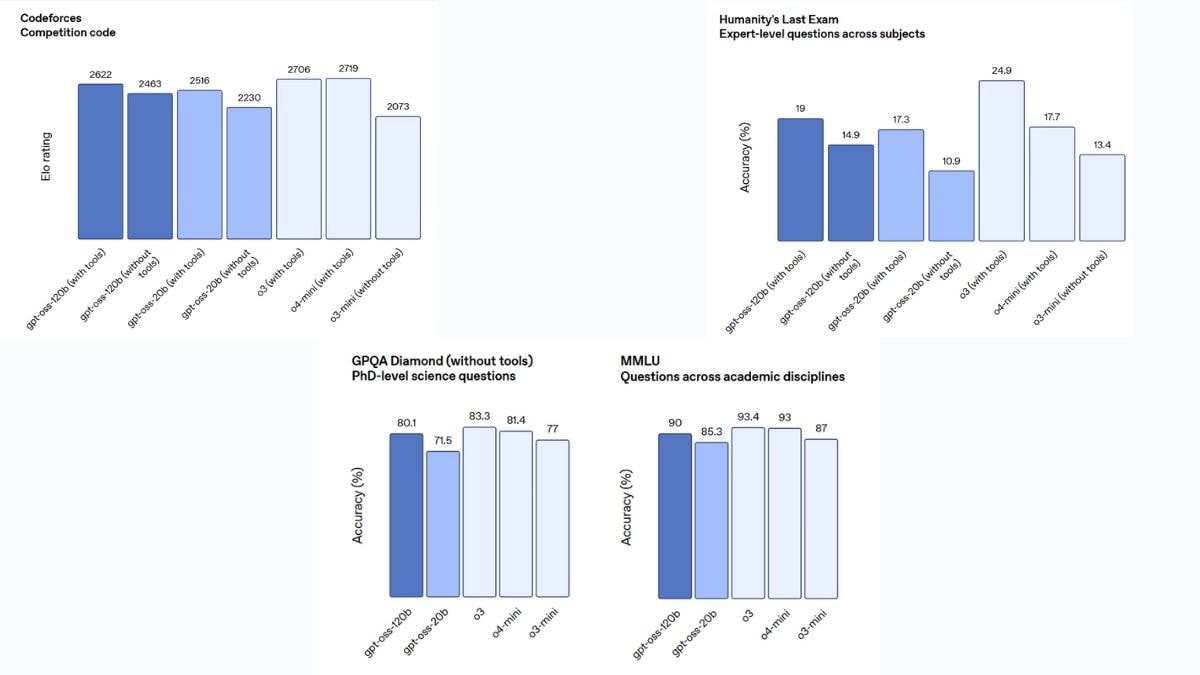

Benchmark performance of the open-source OpenAI models. Photo Credit: OpenAI

gpt-oss-120b: A Rising Star?

According to OpenAI's internal tests, gpt-oss-120b is a force to be reckoned with. It outperforms o3-mini on competition coding (Codeforces), general problem solving (MMLU and Humanity's Last Exam), and tool calling (TauBench). The crown isn't quite secured, though: on other benchmarks, notably the difficult GPQA Diamond, o3 and o3-mini keep a slight edge, leaving room for improvement.

OpenAI says it backed the models with rigorous safety measures. During pretraining, it filtered out harmful data, specifically content related to chemical, biological, radiological, and nuclear (CBRN) threats. Think of it as a digital hazmat suit. The safeguards don't stop there: OpenAI also used specialized training methods so the models refuse unsafe requests and resist prompt injections.

OpenAI says that, despite the models' open-source nature, safeguards have been baked in to stop malicious users from tweaking them to generate harmful content.
