Z.ai Releases GLM-4.5 and GLM-4.5-Air Open-Source Agentic AI Models

Z.ai, a Chinese AI lab, just dropped two open-source language models that are poised to shake up the field: GLM-4.5 and GLM-4.5-Air. These aren’t your average language models; Z.ai is touting them as their most advanced yet. What makes them special? They’re built for both lightning-fast responses and complex problem-solving, switching between a “thinking” mode for intricate tasks and a rapid-fire mode for instant answers. Plus, they’re designed with agentic capabilities, hinting at a future where AI can proactively take action. The bold claim? Z.ai believes these models are now the top dogs in the open-source AI world.

Z.ai Introduces Open-Source GLM-4.5 AI Models

Forget juggling specialized AI models! A Chinese AI firm just dropped GLM-4.5, a “generalist” LLM designed to master everything. Fed up with Google, OpenAI, and Anthropic’s “one-trick pony” AI, they’re claiming GLM-4.5 “unifies” diverse skills into a single, powerhouse model. Is this the end of AI specialization?

Unleash the Power Within: GLM-4.5 and its Agile Air Companion

Imagine an AI with 355 billion total parameters, a digital brain capable of feats once confined to science fiction. That’s GLM-4.5, but the real magic lies in its 32 billion active parameters, the subset that actually does the work on any given token. Not to be outdone, its sibling, GLM-4.5-Air, packs a punch with 106 billion total parameters, 12 billion of which are active.
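To put those numbers in perspective, here is a minimal sketch that works out what share of each model's parameters is active at once. The parameter counts come from the figures above; the dictionary layout and the `active_fraction` helper are illustrative names, not anything published by Z.ai.

```python
# Parameter counts in billions, as reported in the article.
MODELS = {
    "GLM-4.5": {"total": 355, "active": 32},
    "GLM-4.5-Air": {"total": 106, "active": 12},
}


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters that are active per token."""
    return active / total


for name, params in MODELS.items():
    share = active_fraction(params["total"], params["active"])
    print(f"{name}: {share:.1%} of parameters active")
```

In other words, only about one parameter in ten is active at a time in either model, which is where the compute savings of a sparse design come from.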

These aren’t just large language models; they’re unified powerhouses. Reasoning, coding, and acting are seamlessly woven into a single architecture. Forget juggling multiple AI tools – GLM-4.5 integrates it all. And with a massive 128,000-token context window, it can grasp nuances and complexities other models miss. Plus, native function calling means it can interact with the real world, turning ideas into action with unprecedented ease.
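What does function calling look like in practice? Roughly speaking, the model emits a structured request naming a tool and its arguments, and the application runs it. The sketch below is a generic illustration only: the JSON shape, the `get_weather` tool, and the `dispatch` helper are all hypothetical and are not taken from Z.ai's API.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical tool; a real one would query an external service.
    return f"Sunny in {city}"


# Registry mapping tool names to the Python functions that implement them.
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Parse a tool call emitted by a model and run the matching function."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]
    return func(**call["arguments"])


# Assumed example of what a model might emit when it decides to call a tool.
model_output = '{"name": "get_weather", "arguments": {"city": "Beijing"}}'
result = dispatch(model_output)
print(result)
```

The tool's result would normally be fed back to the model so it can continue the conversation with fresh information.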

Z.ai supercharged its model with a mixture-of-experts (MoE) architecture, a design in which only a few "expert" sub-networks run for each token, slashing compute costs during training and deployment. But here’s where they zig instead of zag: Unlike DeepSeek-V3, which beefed up MoE layers with wider dimensions and more experts, Z.ai took a different route with its GLM-4.5 series. They slimmed down the width and cranked up the depth by stacking more layers, betting that a deeper architecture would unlock superior reasoning prowess.
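For readers curious how an MoE layer saves compute, here is a toy sketch of top-k routing, the general technique behind most MoE models. It is not Z.ai's implementation: the experts are stand-in functions, and the gate scores are hard-coded where a real model would compute them from the input with a learned router.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def top_k_route(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: Sequence[Expert], gate_logits: Sequence[float], k: int = 2) -> float:
    """Run only the k selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Four toy experts; only two of them run for this input.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_logits = [0.1, 2.0, 2.0, -1.0]  # assumed router scores for illustration
y = moe_forward(3.0, experts, gate_logits, k=2)
print(y)
```

The two unselected experts never execute, which is why a model can carry a very large total parameter count while using only a fraction of it per token.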

The company’s blog post unveils the innovative pre-training and post-training techniques used to forge the models from the ground up, offering developers a rare peek under the hood.

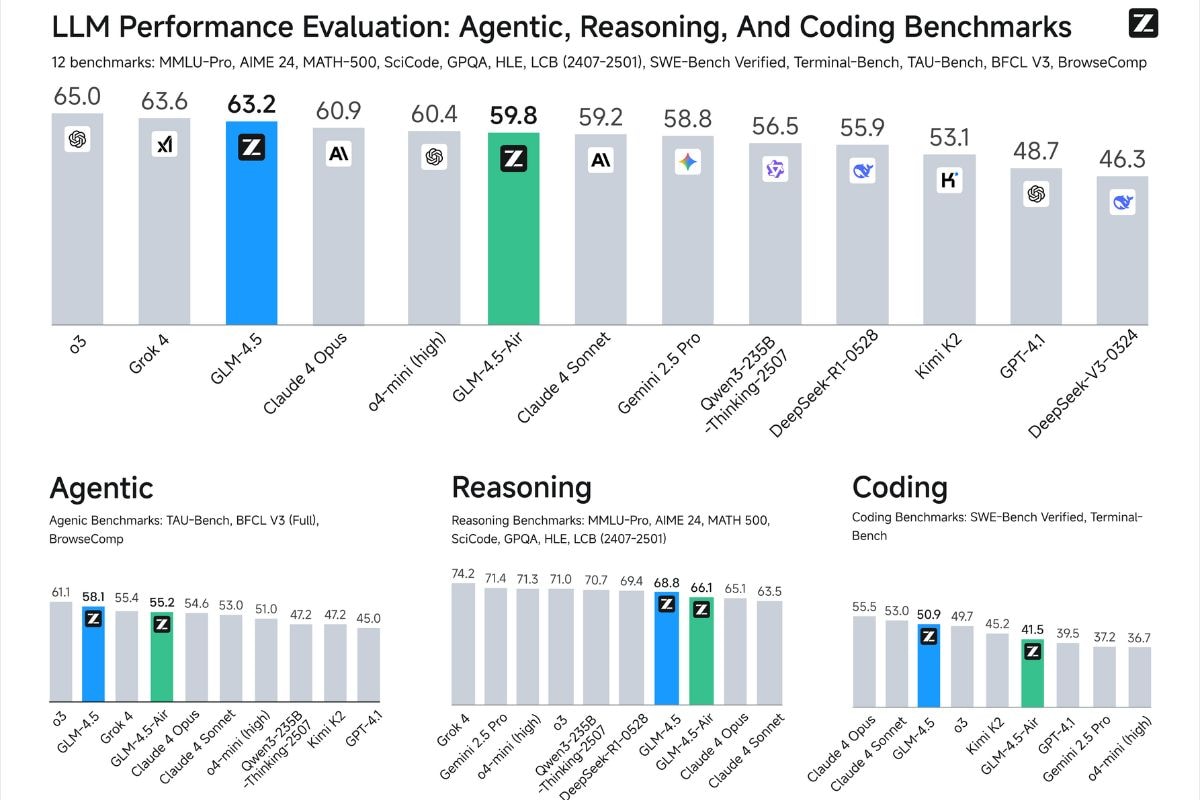

Performance of GLM-4.5 series AI models. Photo Credit: Z.ai

Z.ai’s GLM-4.5 has entered the AI ring, claiming a bronze medal finish in an internal performance showdown. The Chinese AI firm pitted its model against heavyweights from OpenAI (o3), xAI (Grok 4), and others, running it through a gauntlet of 12 benchmarks across agentic tasks, complex reasoning, and coding challenges. According to Z.ai’s evaluation, GLM-4.5 landed in 3rd place, suggesting a potential shakeup in the large language model landscape. The question now is: will independent testing corroborate Z.ai’s bold claims?

Unleash the power of these LLMs! Grab the open weights directly from Z.ai’s GitHub and Hugging Face repositories, or seamlessly integrate them into your projects via the company’s website and API. Your AI adventure starts now!
